Building an AI model is a fascinating journey that combines data science, machine learning, and often a touch of creativity to solve real-world problems. In recent years, AI has transformed industries by enabling tasks such as image recognition, natural language processing, and predictive analytics. However, creating a functional and efficient AI model involves more than just coding: it requires a thoughtful approach to data preparation, model selection, training, and validation.

In this blog, Optimity Logics will walk through the essential steps to build a successful AI model, from defining the problem and gathering data to training, evaluating, and deploying the final model.

By understanding these basic steps, you can build meaningful AI applications no matter how complex your project is.

What Is an AI Model?

An AI model is a core component of artificial intelligence systems that enables machines to learn from data and make decisions or predictions. Unlike traditional software that follows explicitly programmed rules, an AI model learns patterns and insights from examples during training, allowing it to perform complex tasks such as recognising images, understanding language, or predicting trends.

AI models are the building blocks behind intelligent applications across various fields, from healthcare to finance, providing solutions that can adapt and improve with experience.

An artificial intelligence model is a mathematical and computational framework created to carry out tasks that normally require human intelligence. Pattern recognition, decision-making, outcome prediction, and natural language understanding are a few examples of these tasks.

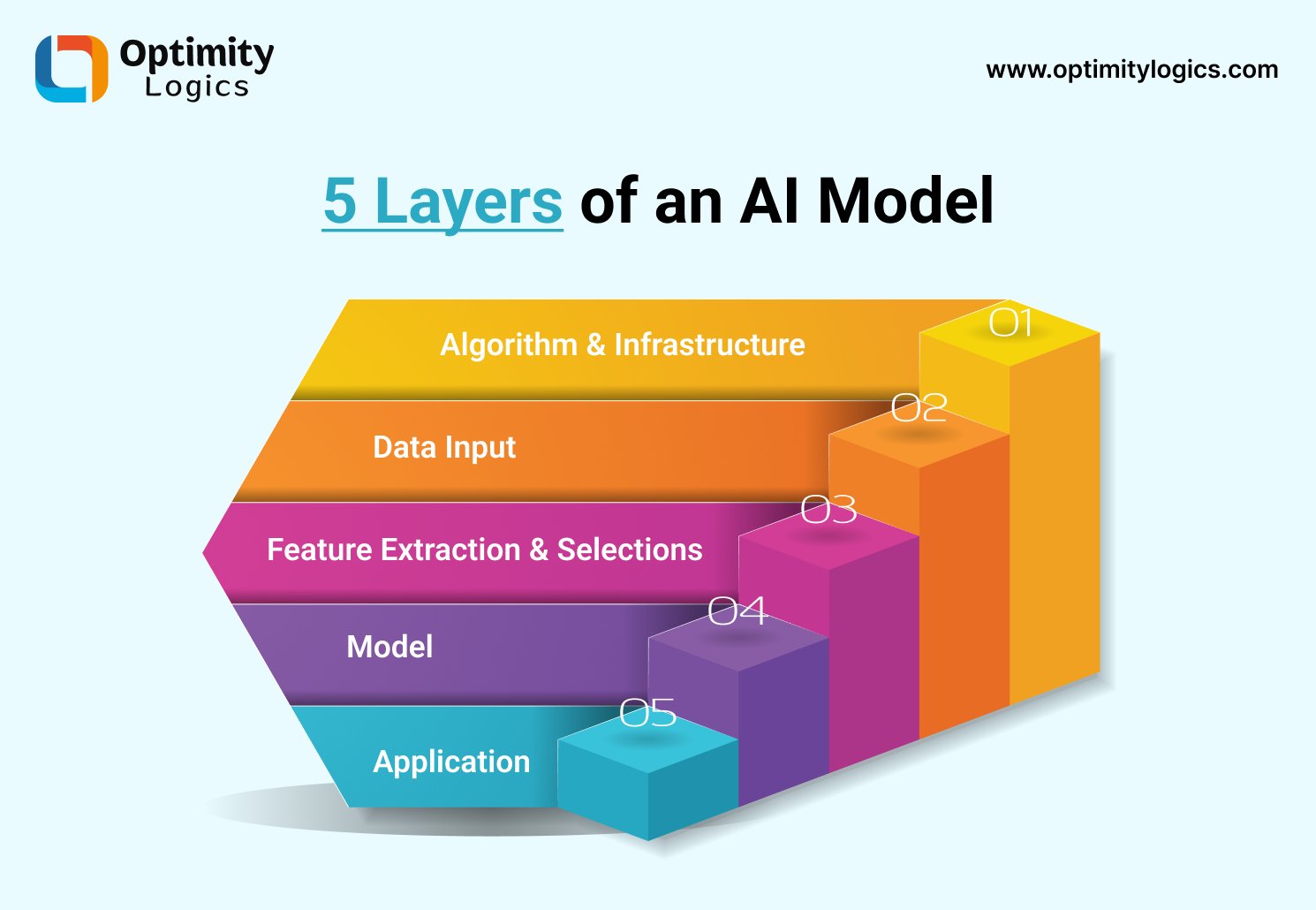

5 Layers of an AI Model

Here, Optimity Logics outlines five important layers of an AI model.

1. Algorithm & Infrastructure Layer

The choice of algorithm is crucial as it defines the approach the model will use to learn from data. Algorithms can range from simple ones like linear regression to more complex deep learning architectures like convolutional neural networks for image processing or transformers for language understanding. The right choice depends on the type of problem, the data, and the desired outcomes.

AI models often require robust computing power, especially for training complex models on large datasets. Infrastructure refers to the computing environment that supports these needs, including hardware like Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs), and software frameworks like TensorFlow or PyTorch. Cloud platforms are also a part of the infrastructure, providing scalable resources for model training and deployment.

Together, the Algorithm & Infrastructure layer sets the stage for the AI model’s development, ensuring it has the necessary computational power and structure to learn efficiently and perform accurately.

2. Data Input Layer

The Data Input Layer is the stage where raw data enters the AI model, serving as the essential starting point for the model’s learning process. This layer is responsible for preparing and delivering data in a format that the model can process effectively.

The Data Input Layer converts raw data into a structured input, enabling the AI model to interpret it and move forward in the learning process. This layer is critical, as high-quality, well-prepared data improves the model’s ability to learn accurate and robust patterns.
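To make the idea of "structured input" more concrete, here is a minimal sketch of turning raw records into numeric vectors. The field names (`age`, `plan`) and the sample records are hypothetical, chosen only for illustration; a real pipeline would depend on your own data.

```python
# Hypothetical raw records; the field names are illustrative only.
RAW_RECORDS = [
    {"age": "34", "plan": "basic"},
    {"age": "51", "plan": "premium"},
    {"age": "27", "plan": "basic"},
]


def build_vocabulary(records, field):
    """Return the sorted distinct values of a categorical field."""
    return sorted({record[field] for record in records})


def to_vector(record, plan_vocab):
    """Convert one raw record into a flat list of floats."""
    age = float(record["age"])  # numeric text becomes a number
    # One-hot encode the categorical field: one slot per known category.
    one_hot = [1.0 if record["plan"] == plan else 0.0 for plan in plan_vocab]
    return [age] + one_hot


plan_vocab = build_vocabulary(RAW_RECORDS, "plan")
vectors = [to_vector(record, plan_vocab) for record in RAW_RECORDS]
```

Each record ends up as a list of numbers with the same length and layout, which is the kind of input most learning algorithms expect.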

3. Feature Extraction & Selection

Feature extraction involves transforming the original raw data into a set of measurable, informative attributes. It is especially important when working with high-dimensional data, such as images or text, as it helps the model focus on meaningful parts of the input. For example, in image processing, feature extraction might detect edges, textures, or colours; in text analysis, it might focus on word frequency or sentence structure.

Dimensionality reduction techniques, like PCA or t-SNE, are applied to reduce the number of features while preserving essential information. This step is particularly valuable for simplifying high-dimensional data, like gene expressions or image pixels, by focusing on the features that have the most significant impact.

This layer optimizes the input data by isolating key characteristics and eliminating irrelevant or redundant information. It enhances the model’s performance by focusing on the most informative data, leading to faster training, improved accuracy, and reduced computational costs.
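As a rough illustration of both ideas, the sketch below extracts word-frequency features from a few made-up sentences and then drops any feature that never varies across documents. The variance filter is just one simple selection rule picked for this example; it is not PCA or t-SNE, and the sample sentences are assumptions.

```python
from collections import Counter
from statistics import pvariance

# Made-up documents used only for illustration.
DOCS = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "the cat chased the dog",
]

# Feature extraction: one word-count column per distinct word.
vocab = sorted({word for doc in DOCS for word in doc.split()})
matrix = [[Counter(doc.split())[word] for word in vocab] for doc in DOCS]


def select_features(matrix, names, min_variance=0.0):
    """Keep only the columns whose variance exceeds min_variance."""
    keep = [
        j for j in range(len(names))
        if pvariance([row[j] for row in matrix]) > min_variance
    ]
    kept_names = [names[j] for j in keep]
    reduced = [[row[j] for j in keep] for row in matrix]
    return kept_names, reduced


selected_names, reduced_matrix = select_features(matrix, vocab)
```

Here the word "the" appears twice in every sentence, so it carries no information for telling the documents apart and is removed, while words like "cat" and "dog" are kept.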

4. Model Layer

The Model Layer is where the AI model’s core learning and processing occur. It contains the architecture that learns from data patterns and transforms features into useful predictions or classifications. This layer varies in complexity depending on the type of model and task, ranging from simple linear models to deep neural networks with many layers.

This layer’s structure, parameters, and learning mechanisms enable the AI to analyze data, recognize patterns, and make predictions, adapting to new data over time.
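For a feel of how a model turns features into a prediction, here is a minimal sketch of about the simplest model layer there is: a single logistic unit. The weights and bias below are made-up values standing in for parameters that training would normally produce.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Weighted sum of the features plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def classify(features, weights, bias, threshold=0.5):
    """Turn the probability into a 0/1 class label."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0


# Hypothetical parameters; in practice these are learned during training.
WEIGHTS = [0.8, -0.4]
BIAS = 0.1

label = classify([2.0, 1.0], WEIGHTS, BIAS)
```

Deep neural networks stack many such units into layers, but the basic pattern of parameters applied to features stays the same.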

5. Application Layer

In the last phase of an AI model’s lifespan, known as the Application Layer, the trained model is implemented and incorporated into a practical app. This layer makes the model accessible to end users or systems that depend on its insights by putting its predictions and judgements to use in real-world situations.

Essentially, the Application Layer ensures that the AI model meets practical needs and integrates seamlessly with humans or other systems, making it usable in a real-world setting. It provides end users with useful insights, forecasts, or choices by bridging the gap between the model’s internal operations and outward functionality.

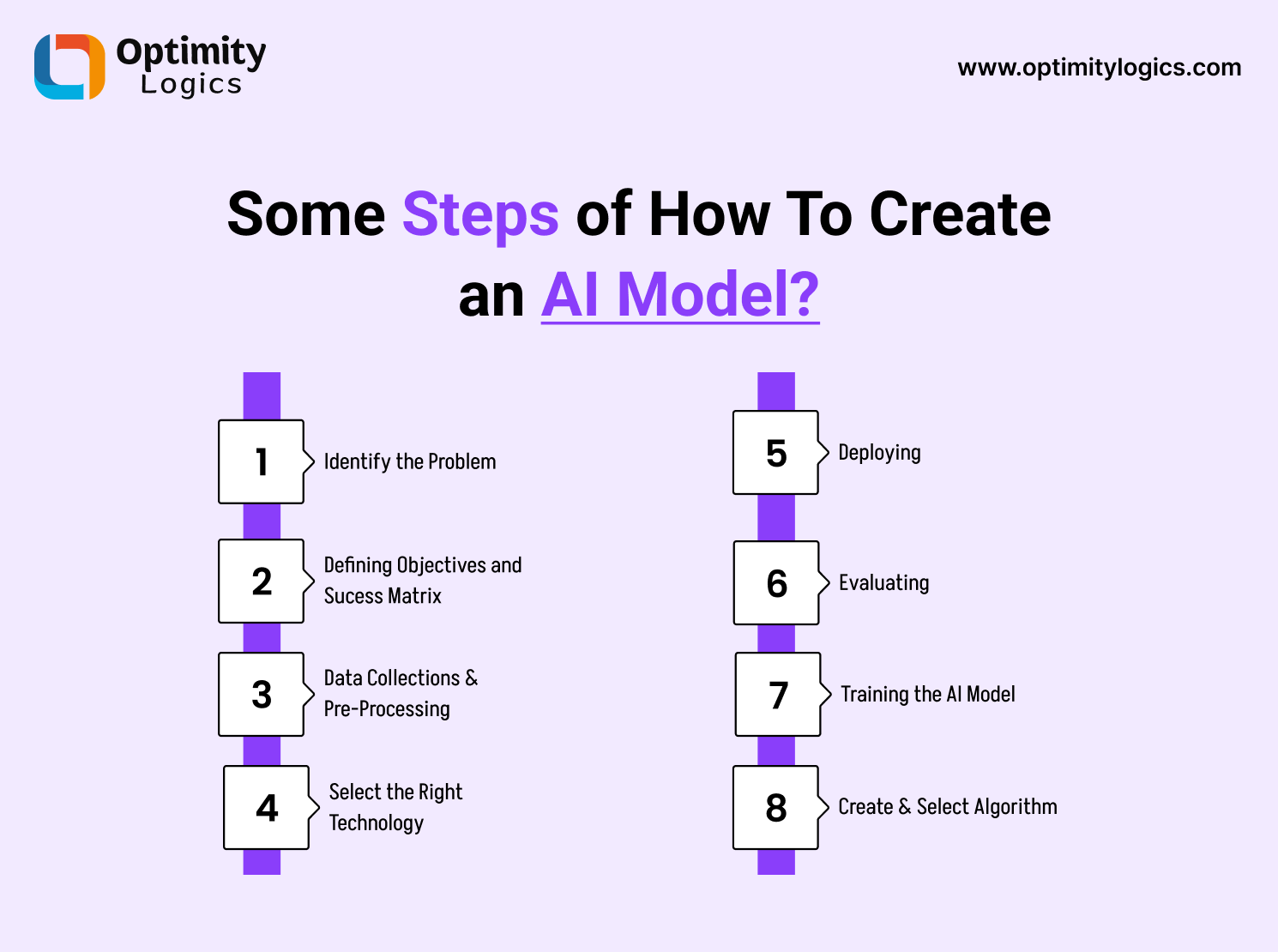

Steps To Create an AI Model

Creating an AI model involves several key steps that transform raw data into a functional and accurate predictive system. Here’s a step-by-step guide on how to create an AI model:

– Identify the Problem

Clearly defining the problem you wish to tackle is the first step in developing an AI model. This means understanding the specific challenge, the environment in which the AI will be used, and the intended results.

A clear problem statement ensures that the project has quantifiable goals and a focused direction. Having the support of knowledgeable AI developers can be quite beneficial in this process.

– Define Objectives and Success Metrics

Setting specific goals and success metrics is essential once the problem has been identified. Objectives specify what the AI model seeks to accomplish, while success metrics offer measurable standards for assessing the model’s performance, such as accuracy, precision, and processing speed.

Classification, prediction, generation, reinforcement learning, anomaly detection, recommendation, and other areas may be the focus of your AI model. Success criteria should be set in line with the specific goals of the model.

For instance, if you were running an online retailer like Amazon, you would want your AI model to concentrate on personalisation and recommend products to people who are likely to buy them. If sales and the relevance of recommendations on the platform are increasing, the AI model is meeting its success metrics.
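Two of the metrics mentioned above, accuracy and precision, can be sketched in a few lines. The labels, predictions, and the 0.7 target below are made up for illustration; your own targets should come from the objectives you defined.

```python
def accuracy(y_true, y_pred):
    """Share of all predictions that match the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Share of positive predictions that were actually positive."""
    predicted_positive = sum(1 for p in y_pred if p == positive)
    if predicted_positive == 0:
        return 0.0  # no positive predictions, so precision is reported as 0
    true_positive = sum(
        1 for t, p in zip(y_true, y_pred) if p == positive and t == positive
    )
    return true_positive / predicted_positive


# Hypothetical labels: 1 = "customer bought the recommended item".
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

TARGET_ACCURACY = 0.7  # assumed target, chosen only for this example
model_accuracy = accuracy(y_true, y_pred)
model_precision = precision(y_true, y_pred)
meets_target = model_accuracy >= TARGET_ACCURACY
```

Writing the target down as a number before training makes it easier to judge later whether the model is good enough.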

– Data Collection & Pre-Processing

The basis of any AI model is data. The model can produce better outcomes if it is trained on data that is closely relevant to its objectives and success metrics.

For this reason, our data scientists begin by cleaning the data to eliminate inconsistencies. To produce datasets relevant to the problem domain, they handle missing values, normalise data scales, and extract features. This allows the AI model to be trained to produce output of the required quality.
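Two of these cleaning steps, filling in missing values and normalising scales, might look like the sketch below. Mean imputation and min-max scaling are common choices but not the only ones, and the income figures are invented for the example.

```python
from statistics import mean


def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly so the smallest is 0.0 and the largest 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


# Invented sample column with one missing entry.
raw_incomes = [30000.0, None, 50000.0, 70000.0]

cleaned = impute_missing(raw_incomes)
scaled = min_max_scale(cleaned)
```

After these two steps the column has no gaps and sits on a 0 to 1 scale, so it can be combined with other features without one column dominating because of its units.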

– Select the Right Technology

Choosing the right framework or library, like TensorFlow, PyTorch, or scikit-learn, is essential, as each comes with unique advantages. For instance, TensorFlow and PyTorch are excellent choices for deep learning models, offering robust tools and support for complex neural networks and GPU acceleration.

In addition to selecting the right framework, it’s essential to choose the correct infrastructure for your model. Cloud platforms like AWS, Google Cloud, and Azure offer scalable, flexible environments for developing and deploying AI models, with tools for managing data, training models, and monitoring performance.

These platforms also provide specialized hardware, such as GPUs and TPUs, which can significantly accelerate training times for larger models. Alternatively, for projects with lower computational demands or specific security needs, on-premise infrastructure may be more appropriate. Ultimately, the chosen technology should support both the model’s initial development and its deployment requirements, ensuring that the solution is efficient, reliable, and scalable.

– Deploying

Deploying an AI model means integrating the trained model into a real environment so that it can generate insights or predictions. Although the details vary with the use case, deployment generally consists of stages like packaging the model, selecting a deployment environment, and making sure the model is accessible to end users or other systems. Model packaging is usually the first stage: the trained model is stored in a format that makes it simple to load into production systems.
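As a small illustration of the packaging stage, the sketch below stores a toy model's parameters as JSON and loads them back to serve a prediction. JSON is used here only because it keeps the example simple; real frameworks have their own save formats, and the parameter values and the `format_version` field are assumptions made for this example.

```python
import json


def package_model(weight, bias):
    """Serialise the trained parameters into a portable JSON string."""
    return json.dumps({"format_version": 1, "weight": weight, "bias": bias})


def load_model(packaged):
    """Rebuild a prediction function from a packaged model."""
    params = json.loads(packaged)

    def predict(x):
        return params["weight"] * x + params["bias"]

    return predict


# Hypothetical parameters standing in for the result of training.
packaged = package_model(weight=2.0, bias=1.0)

# In production, the string would be read from a file or model store.
predict = load_model(packaged)
prediction = predict(3.0)
```

The key point is that the production system only needs the packaged artefact and the loading code, not the training data or the training script.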

– Evaluating

Evaluating an AI model is a crucial step to ensure that the model is performing as expected and can generalize well to new, unseen data. This phase helps to assess the model’s effectiveness in solving the defined problem and identify areas that may need improvement. Evaluation begins after training the model on a training dataset and involves testing it on a separate test dataset that the model has never seen before.
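The train/test idea can be sketched as follows. The data is synthetic, the 25% test share is an arbitrary choice, and the "model" is a deliberately simple threshold rule so that the focus stays on the evaluation procedure.

```python
import random
from statistics import mean


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and hold out a fraction as unseen test data."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_threshold(train_rows):
    """Toy model: split at the midpoint between the two class means."""
    zeros = [x for x, y in train_rows if y == 0]
    ones = [x for x, y in train_rows if y == 1]
    return (mean(zeros) + mean(ones)) / 2


def accuracy(rows, threshold):
    """Share of rows whose predicted class matches the true class."""
    hits = sum(1 for x, y in rows if (1 if x >= threshold else 0) == y)
    return hits / len(rows)


# Synthetic data: the label is 1 when x is at least 10.
DATA = [(float(x), 1 if x >= 10 else 0) for x in range(20)]

train_rows, test_rows = train_test_split(DATA)
threshold = fit_threshold(train_rows)      # the model only sees training rows
test_accuracy = accuracy(test_rows, threshold)  # scored on held-out rows
```

Because the test rows play no part in fitting the threshold, the score on them is a fairer estimate of how the model would behave on new data.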

– Training the AI Model

Training an AI model is the process where the model learns patterns from the data and adjusts its internal parameters (such as weights and biases) to minimize errors and improve accuracy. It is a fundamental step in building an AI model, where the model iteratively learns from the training data and improves its ability to make predictions or classifications.

The training process is iterative, and finding the optimal parameters for the model is key to ensuring it performs well on unseen data. By carefully managing data, selecting the right algorithm, and adjusting hyperparameters, you can train an AI model that learns effectively and can make accurate predictions.
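To show what "iteratively adjusting weights and biases" can look like, here is a minimal sketch of gradient descent fitting a straight line. The data, learning rate, and number of epochs are assumptions picked so the toy example converges; real models have far more parameters but follow the same loop.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # start from arbitrary parameters
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

weight, bias = train_linear(xs, ys)
```

The learning rate and the number of epochs are hyperparameters: set the rate too high and the updates overshoot, too low and training takes much longer to converge.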

– Create & Select Algorithm

A crucial stage in the machine learning process is choosing and developing the best algorithm for an AI model. The model’s performance, learning capacity, and generalisation to new data are all directly affected by the choice of algorithm.

The right choice depends on the problem type (supervised, unsupervised, or reinforcement learning), the data size and complexity, and the specific task at hand. Once the algorithm is selected, it can be customized and fine-tuned through hyperparameter optimization and feature engineering to achieve the best performance.
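Hyperparameter optimization can be as simple as trying a few candidate values and keeping the one that scores best on validation data. The sketch below does this for the number of neighbours `k` in a small k-nearest-neighbours classifier; the data points and candidate values are invented for illustration.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Predict the majority label among the k nearest training points."""
    neighbours = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


def validation_accuracy(train, val, k):
    """Share of validation points classified correctly for a given k."""
    hits = sum(1 for x, y in val if knn_predict(train, x, k) == y)
    return hits / len(val)


def grid_search(train, val, candidates):
    """Score every candidate k and return the best one with all scores."""
    scores = {k: validation_accuracy(train, val, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores


# Invented 1-D data; (2.4, 1) is a deliberately noisy training point.
TRAIN = [(1.0, 0), (2.0, 0), (3.0, 0), (2.4, 1), (7.0, 1), (8.0, 1), (9.0, 1)]
VAL = [(1.5, 0), (2.5, 0), (7.5, 1), (8.5, 1)]

best_k, scores = grid_search(TRAIN, VAL, candidates=[1, 3, 5])
```

With `k=1` the noisy point misleads the classifier on one validation example, while larger values of `k` average it out, which is why the search prefers them here.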

Challenges You May Face During AI Model Development

– Data Quality & Quantity Issues

– Ethical Issues

– Overfitting & Underfitting Issues

– Privacy Concerns

– Imbalanced Data

– Integration with Existing System

– Real-time Inference Challenges

– High-Cost Deployment

Approximate Cost To Create an AI Model

The cost of developing an AI model is likely to fall between $1,000 and $350,000, and can reach millions of dollars. It varies depending on a number of factors, including the model’s complexity, the development methodology, the data requirements, the expertise required, where that expertise is located, the time required, and more.

It can cost thousands of dollars to develop basic machine-learning models or AI-powered chatbots like Replika. However, more sophisticated systems that rely on big data and deep learning, such as computer vision software, natural language processing software, or generative AI software, can cost millions of dollars.

Furthermore, creating an AI model from scratch for your project type takes a lot more time and money than utilising cloud-based platforms or pre-existing solutions.

If budget is tight, using pre-trained models, open-source tools, and cloud-based platforms that provide AI services is always an option.

You can speak with an AI development company to get more information about the price of building an AI model. They can then evaluate your particular requirements and provide you with a more precise quote.

How Optimity Logics Can Assist You in AI Model Development

Optimity Logics helps companies leverage artificial intelligence to tackle challenging problems and spur innovation by offering end-to-end support in AI model creation. Optimity Logics guarantees that every stage is managed with skill, from the first stage of determining business objectives and data requirements to deploying and maintaining the AI model.

They are experts at customising AI solutions, whether through natural language processing, computer vision, or predictive analytics, to fit particular business requirements.

They guarantee that your datasets are clean, normalised, and training-ready by providing comprehensive data preparation and preprocessing services.

Additionally, Optimity Logics chooses the most suitable deep learning and machine learning algorithms and tunes them for the best performance.